Time Series — From Analyzing the Past to Predicting the Future

How to learn from the past with time series.

If you are interested in making forecasts about a future outcome, time series analysis is the tool for it. Time series data is a collection of observations recorded at specific intervals, such as an hourly weather report or a daily inventory and sales report. Time series analysis gives us tools to understand historical data and use it to forecast the future with more confidence. For example, I am personally grateful to the scientists who built weather forecasting through the study of time series, and to businesses such as Amazon that rely on their sales forecasts to better place inventory so that I can receive my Amazon order as soon as possible!

There admittedly are a number of time series tutorials available online but I still decided to write this post because of the following contributions:

- Build Foundation: Instead of assuming reader’s familiarity with time series, we will go deeper into introducing the foundational concepts related to the time series to better understand the topic and follow along.

- Build Breadth: Once readers are familiar with the foundational knowledge necessary to appreciate time series, we then introduce 10 different time series forecasting methods that can be used in various scenarios.

- Organization: Reading through 10 different ways of doing the same thing can become tedious. In order to better follow the flow of the post, the models are categorized and get more and more complex as we move forward, starting from univariate and multivariate statistical models and then moving to machine learning and deep learning approaches.

- Code: Models are implemented in Python and visualized to provide the readers with hands-on experience of using the models and seeing the results.

- Reference Table: Last contribution but surely not the least is the comparative table that I prepared and included right after this paragraph. Once you go through this post, you will be familiar with the models but we all forget the details. The purpose of this table is to be used as a reference that you can go back to in the future to find the right model to use for your use case.

With the introductions out of the way, let’s get started!

1. Time Series Basics

Before getting into forecasting, which admittedly is the fun part of time series, we need to cover some concepts to better understand the space, as follows:

- Trend: Similar to how we use “trend” in day-to-day conversations, trend in the context of time series also refers to the directional increase or decrease of the data over time. For example, if we record the height of an individual from age of 3 to 18 annually, we will see an overall increasing trend in the data. If we continue recording the height through the age of, let’s say 50, we will see that the increase or inclining trend continues up to a certain age and then once growth slows down and eventually stops, the height almost flattens. These are examples of trend in a time series.

- Seasonality: As the name suggests, refers to regular patterns in the underlying data during a specific period of time. For example, if we look at monthly weather data for Seattle over the past 10 years, we will see how the temperatures tend to increase during spring and summer time and then decrease during fall and winter months, which is repeated annually — this is a seasonality.

- Cyclicality: This one is similar to seasonality but it does not happen in a fixed schedule. For example, economic business cycles do not follow and repeat a regular time line, which makes them harder to forecast.

- Noise: Noise or residuals represent irregular fluctuations that do not follow a pattern. For the more statistically-inclined readers, noise is the random variation in the data, which makes predictions challenging. For example, a sudden surge in the sales data due to a one-day promotion is considered noise. We usually remove noise from the data to better understand the underlying patterns.

Now that we covered the basic concepts, let’s visualize some of them for a better understanding, in the code block below. I have added comments in the code block to make it easier to follow along.

# import libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from statsmodels.tsa.seasonal import seasonal_decompose

# create a synthetic dataset

dates = pd.date_range(start='2000-01-01', periods=240, freq='MS')  # month-start frequency; the older 'M' alias is deprecated in recent pandas

trend = np.linspace(10, 50, 240)

seasonal = 10 * np.sin(2 * np.pi * dates.month / 12)

noise = np.random.normal(0, 2, 240)

# combine components to create the synthetic time series

data = trend + seasonal + noise

df = pd.DataFrame({'value': data}, index=dates)

# decompose time series

result = seasonal_decompose(df['value'], model='additive', period=12)

# visualize

plt.figure(figsize=(14, 10))

# original time series

plt.subplot(4, 1, 1)

plt.plot(df['value'], label='Original Time Series')

plt.legend(loc='upper left')

plt.title('Original Time Series')

# trend

plt.subplot(4, 1, 2)

plt.plot(result.trend, label='Trend', color='green')

plt.legend(loc='upper left')

plt.title('Trend')

# seasonality

plt.subplot(4, 1, 3)

plt.plot(result.seasonal, label='Seasonality', color='orange')

plt.legend(loc='upper left')

plt.title('Seasonality')

# residual/noise

plt.subplot(4, 1, 4)

plt.plot(result.resid, label='Residuals (Noise)', color='red')

plt.legend(loc='upper left')

plt.title('Residuals (Noise)')

plt.tight_layout()

plt.show()

Results:

Starting from the top, the first graph visualizes the overall time series that we created for this exercise. This one has all the combined components of trend, seasonality and residuals (noise). In the “original time series” graph in blue, we can see that overall the series has an increasing trend, which is confirmed in the second graph named “trend” in green. Looking back at the first graph of the “original time series” in blue, we can also tell there is a seasonality to the series, which is confirmed in the “seasonality” graph in orange. And finally, the graph at the bottom in red shows the “noise” or the “residuals”.

This was a simple example where we could tell there is a clear trend and seasonality to our data, but what if we cannot easily tell what is going on in the data? That brings us to the next part of the post, which I will call the exploratory data analysis, where we can explore the data to glean the underlying patterns.

2. Exploratory Data Analysis in Time Series

Exploratory data analysis in the context of time series is the process of analyzing the data set to uncover features such as trends, seasonality and cyclic components. Remember that the goal of understanding a time series is to make better forecasts and exploratory data analysis is one essential step towards that direction. I will cover some of the most common methods used in exploratory data analysis for time series below:

- Time Series Decomposition: As the name suggests, this method decomposes the time series into its components of trend, seasonality and residuals/noise. We have in fact already encountered this: the example above used the “statsmodels” library to decompose the time series. There are two ways of decomposing, called “additive” and “multiplicative”. Additive models assume that the components of a time series are added together (i.e. value = trend + seasonality + residuals), while multiplicative models assume that the components are multiplied (i.e. value = trend * seasonality * residuals). As a general rule, if the magnitude of the seasonal fluctuations remains roughly constant over time, we use an “additive” model, and if the magnitude of the seasonal fluctuations changes proportionally with the trend, we use a “multiplicative” model. Visualization can be helpful in determining which approach to take, and in practice we sometimes just try both to see which decomposition fits the time series better, so do not be shy to try both.

- Stationarity: This is another frequently used term when you read about time series, because many time series forecasting models assume the time series is stationary. Stationary means that the statistical properties of the time series, such as the mean, variance and autocovariance, remain constant over time. Although the definition sounds abstract, there are easy tests to determine whether a time series is stationary, such as the augmented Dickey-Fuller (ADF) test. If a time series is determined to be non-stationary, there are transformations, such as differencing, that can make it stationary.

- Autocorrelation and Partial Autocorrelation: Remember that the goal is to make better predictions using a time series and therefore correlation among data points can be helpful. Autocorrelation is a measure of the correlation between a time series and its previous data points (i.e. lagged values), which indicates how present values are related to past values at a given time lag. An intuitive example here is that the temperature today is related to the temperature of yesterday, which is a lagged value for today’s temperature. Partial autocorrelation measures the same relationship but controls for the shorter lags. For example, the partial autocorrelation at lag 3 is the correlation between the current entry and the third past entry after the linear effect of the first and second past entries has been removed, so it captures only the direct relationship at that lag.
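To make the difference between autocorrelation and partial autocorrelation concrete, here is a minimal NumPy-only sketch. The helper names `autocorr` and `partial_autocorr` and the simulated AR(1) series are assumptions made for illustration; in practice a library routine would normally be used instead of hand-rolled functions.

```python
import numpy as np

def autocorr(x, lag):
    """Sample autocorrelation of x at a positive lag."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    return float(np.dot(centered[lag:], centered[:-lag]) / np.dot(centered, centered))

def partial_autocorr(x, lag):
    """Coefficient on x[t-lag] when x[t] is regressed on x[t-1], ..., x[t-lag],
    i.e. the relationship at `lag` after controlling for the shorter lags."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    n = len(centered)
    # column j holds the series lagged by j+1 steps, aligned with the target
    lagged = np.column_stack([centered[lag - j - 1 : n - j - 1] for j in range(lag)])
    target = centered[lag:]
    coefs, *_ = np.linalg.lstsq(lagged, target, rcond=None)
    return float(coefs[-1])

# simulate an AR(1) series: each value depends directly only on the previous one
rng = np.random.default_rng(0)
phi, n = 0.8, 5000
series = np.zeros(n)
for t in range(1, n):
    series[t] = phi * series[t - 1] + rng.normal()

acf1 = autocorr(series, 1)
acf2 = autocorr(series, 2)
pacf2 = partial_autocorr(series, 2)
print(f"ACF lag 1: {acf1:.3f}, ACF lag 2: {acf2:.3f}, PACF lag 2: {pacf2:.3f}")
```

For a series like this one, the lag-2 autocorrelation should stay clearly positive (the influence of two steps ago is passed along through yesterday's value), while the lag-2 partial autocorrelation should come out close to zero because there is no direct lag-2 effect once lag 1 is accounted for.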

There are also other ways to perform exploratory analysis, such as outlier detection, data visualization and feature analysis but for this post, we only rely on these most frequently used topics covered above.
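As a rough illustration of the stationarity idea discussed above, the sketch below builds a series whose mean drifts over time and then applies first-order differencing, one common transformation. The synthetic series and the `half_means` helper are assumptions for illustration only; comparing the means of the two halves is an informal check, not a substitute for a formal test such as ADF.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 400

# non-stationary series: a linear trend plus noise, so the mean keeps drifting upward
trended = 0.5 * np.arange(n) + rng.normal(0, 1, n)

# first-order differencing: y[t] - y[t-1]
differenced = np.diff(trended)

def half_means(x):
    """Mean of the first half and mean of the second half of x."""
    mid = len(x) // 2
    return float(x[:mid].mean()), float(x[mid:].mean())

trend_gap = abs(half_means(trended)[0] - half_means(trended)[1])
diff_gap = abs(half_means(differenced)[0] - half_means(differenced)[1])

print(f"gap between half means, original:    {trend_gap:.2f}")
print(f"gap between half means, differenced: {diff_gap:.2f}")
```

The original series has very different means in its two halves, while the differenced series has nearly the same mean in both, which is the behaviour we want before handing the data to a model that assumes stationarity.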

We have been talking quite a bit about forecasting and ways to improve that so in the next section, we are going to talk about various time series forecasting methods!

3. Time Series Forecasting Methods

Time series forecasting is a vast area of statistics and data science, so it is easier to group these approaches together and then study them more closely. I will break down these approaches by their underlying architecture and then we will dive deeper into each approach and implement examples to better understand them. The breakdown is below and the numbering corresponds to the sub-sections of this section, in case you’d like to jump ahead to a specific approach. For example, if you are interested in reading more about long short-term memory (LSTM), just jump to subsection 4.1. of this section, which would be 3.4.1.

1. Univariate Statistical Methods

1.1. Simple Exponential Smoothing

1.2. Holt’s Linear Trend

1.3. Holt-Winters Seasonal

1.4. AutoRegressive Integrated Moving Average (ARIMA)

1.5. Seasonal AutoRegressive Integrated Moving Average (SARIMA)

2. Multivariate Statistical Methods

2.1. Vector Autoregressive (VAR)

3. Machine Learning Approaches

3.1. Random Forest

3.2. Extreme Gradient Boosting (XGBoost)

4. Deep Learning Methods

4.1. Long Short-Term Memory (LSTM)

4.2. Gated Recurrent Unit (GRU)

3.1. Univariate Statistical Methods

These are statistical methods that only consider a single variable to describe the underlying patterns within a data set and are hence called univariate. In this post, we will cover two of the more common univariate approaches used for forecasting in time series, called (1) exponential smoothing and (2) Autoregressive integrated moving average (ARIMA) models.

3.1.1. Exponential Smoothing

These methods forecast future values in a time series by assigning exponentially decreasing weights to past observations. Let’s cover three of the most common exponential smoothing methods:

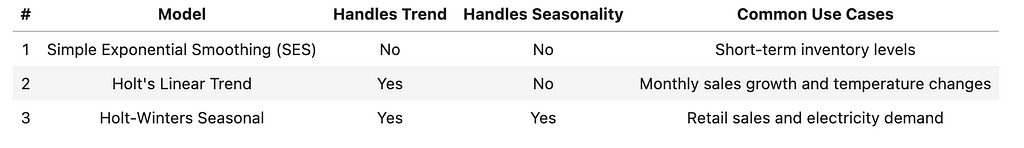

- Simple Exponential Smoothing (SES) is mostly suitable for time series data without trend or seasonality.

- Holt’s Linear Trend, as the name suggests, is suitable for time series data with a linear trend.

- Holt-Winters Seasonal method is suitable for time series data sets with both trend and seasonality.
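The phrase “exponentially decreasing weights” can be sketched in a few lines of plain Python for the simplest of the three methods. The function names, the toy numbers and the choice to initialise the level with the first observation are assumptions made for illustration; statsmodels estimates the smoothing parameter and initial level for you.

```python
def simple_exp_smoothing(values, alpha):
    """Return the smoothed level after each observation, using
    level[t] = alpha * values[t] + (1 - alpha) * level[t-1]."""
    levels = [float(values[0])]  # seed the level with the first observation (one common choice)
    for x in values[1:]:
        levels.append(alpha * x + (1 - alpha) * levels[-1])
    return levels

def implied_weights(alpha, n_recent):
    """Weight the latest level places on each of the n_recent newest observations,
    newest first. (The very first observation instead carries whatever weight is
    left over, because it seeds the level.)"""
    return [alpha * (1 - alpha) ** k for k in range(n_recent)]

levels = simple_exp_smoothing([10, 20, 30], alpha=0.5)
weights = implied_weights(0.5, 3)
print(levels)   # [10.0, 15.0, 22.5]
print(weights)  # [0.5, 0.25, 0.125]
```

The forecast from simple exponential smoothing is just the last level (22.5 here) for every future step, which is why it suits data without trend or seasonality. Holt’s method adds a second recursion for the trend and Holt-Winters adds a third for the seasonal component.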

As you can see, between the three above, we should be able to choose the appropriate approach to take. I have included the summary of these three in the table below for our future reference.

Let’s look at an example to better understand this.

# import libraries

import pandas as pd

import numpy as np

from statsmodels.tsa.holtwinters import SimpleExpSmoothing, ExponentialSmoothing

import matplotlib.pyplot as plt

# random data set

data = np.random.rand(100)

df = pd.DataFrame(data, columns=["Value"])

# visualize

plt.plot(df['Value'])

plt.show()

Results:

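I created the random dataset above and, looking at the visualization, it may appear to have some seasonality and/or trend. Because the values come from np.random.rand, any such pattern is coincidental, but the data is still good enough to demonstrate the mechanics. With that in mind, let’s use the Holt-Winters seasonal approach to make some forecasts.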

# assuming seasonality, adjust the frequency

season_length = 12 # this results in something like monthly data with yearly seasonality

# fit Holt-Winters Seasonal model

model = ExponentialSmoothing(df['Value'], trend='add', seasonal='add', seasonal_periods=season_length)

fit = model.fit()

# forecast the next 12 points (one full season)

forecast = fit.forecast(12)

# visualize

plt.plot(df['Value'], label='Original Data')

plt.plot(np.arange(len(df), len(df) + 12), forecast, label='Forecast', color='red')

plt.legend()

plt.show()

Results:

It is generating some forecasts in red! It is hard to tell whether these are good or bad (we will come to that), but at least the pattern seems to follow the data. Let’s look at what would have happened if we had used, say, Holt’s linear trend. This method ignores seasonality, so if the data really were seasonal we would expect its forecast to be worse than the previous one. Let’s find out!

# fit Holt's Linear Trend model

model = ExponentialSmoothing(df['Value'], trend='add', seasonal=None)

fit = model.fit()

# forecast next 10 points

forecast = fit.forecast(10)

# visualize

plt.plot(df['Value'], label='Original Data')

plt.plot(np.arange(len(df), len(df) + 10), forecast, label='Forecast', color='red')

plt.legend()

plt.show()

Results:

The forecast in red looks just like a straight line, which does not really follow the same pattern as the blue parts of the graph that are our original data points. As expected, this is a worse forecast compared to Holt-Winters seasonal method, given it ignores seasonality.

Let’s move to ARIMA models next, with the hope of getting better results.

3.1.2. ARIMA Models

Autoregressive integrated moving average (ARIMA) models are a family of models that capture a variety of time series patterns, including trends and seasonality, depending on the type of the model used. As a general overview, the “AR” part of ARIMA refers to autoregressive, meaning it relates current values of a time series to its past values. The “I” part of ARIMA stands for integrated, referring to differencing, which is applied to make the time series stationary. The “MA” part of ARIMA stands for moving average, which means the model also expresses the current value as a linear combination of forecast errors from previous time steps. Another variation of ARIMA, which accounts for seasonal effects, is called seasonal ARIMA or SARIMA and, as the name suggests, is useful when seasonality exists.
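As a rough, hand-rolled illustration of the “I” and “AR” pieces only, the sketch below differences a simulated series once and fits a single autoregressive coefficient by ordinary least squares. This is an assumption-laden toy (simulated data, no “MA” term, least squares rather than the estimation a library such as statsmodels performs), so treat it as a way to see the moving parts, not as a replacement for a proper ARIMA fit.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 2000
phi_true = 0.6  # hypothetical AR coefficient used to simulate the data

# simulate a series whose first differences follow an AR(1) process
diffs = np.zeros(n)
for t in range(1, n):
    diffs[t] = phi_true * diffs[t - 1] + rng.normal()
series = np.cumsum(diffs)  # summing the differences gives the (non-stationary) level

# "I": difference once to remove the wandering level
d = np.diff(series)

# "AR": regress d[t] on d[t-1] (plus an intercept) by ordinary least squares
X = np.column_stack([np.ones(len(d) - 1), d[:-1]])
intercept, phi_hat = np.linalg.lstsq(X, d[1:], rcond=None)[0]

# one-step-ahead forecast: predict the next difference, then undo the differencing
next_diff = intercept + phi_hat * d[-1]
next_value = series[-1] + next_diff

print(f"estimated AR coefficient: {phi_hat:.3f}")
print(f"last value: {series[-1]:.3f}, one-step forecast: {next_value:.3f}")
```

The estimated coefficient should land near the value used in the simulation, and the forecast is obtained by adding the predicted change back onto the last observed level, which is the step the word “integrated” refers to.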

I have implemented ARIMA and SARIMA in the post below so I will not cover them here but I wanted to at least provide an overview of them in this post, since they are some of the most common models used in time series forecasting.

Time Series — ARIMA vs. SARIMA vs. LSTM: Hands-On Tutorial

Now that we have covered the univariate models, we will move to the multivariate models used in forecasting time series.

3.2. Multivariate Statistical Models: Vector Autoregression (VAR)

The definition of VAR sounds complicated, but let me go over it and then we will walk through an example to better understand it. VAR is a statistical model that can capture the linear interdependencies among multiple time series. Because multiple time series are involved, this is a multivariate approach. VAR essentially models each variable as a linear function of past values of itself and of the other variables in the system. Given this approach, it is an excellent tool for understanding the dynamic relationships among variables, since it analyzes how each variable is influenced by its own lagged values and the lagged values of other variables.
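Before turning to real data, the idea of “each variable as a linear function of past values of itself and the other variables” can be sketched with two simulated series and one lag. The coefficient matrix `A_true`, the zero-mean assumption (no intercept) and the least-squares fit are all assumptions made for this toy example.

```python
import numpy as np

rng = np.random.default_rng(3)

# hypothetical coefficients: row i says how variable i depends on yesterday's values
A_true = np.array([[0.5, 0.2],
                   [0.1, 0.4]])
n = 3000
Y = np.zeros((n, 2))
for t in range(1, n):
    Y[t] = A_true @ Y[t - 1] + rng.normal(0, 1, 2)

# regress today's vector on yesterday's vector: Y[t] is approximately Y[t-1] @ A.T
past, present = Y[:-1], Y[1:]
coef, *_ = np.linalg.lstsq(past, present, rcond=None)
A_hat = coef.T

# one-step-ahead forecast for both variables at once
forecast = A_hat @ Y[-1]

print("estimated coefficient matrix:")
print(np.round(A_hat, 2))
print("one-step forecast:", np.round(forecast, 3))
```

The off-diagonal entries of the estimated matrix are what make the model multivariate: they measure how much yesterday’s value of one series helps explain today’s value of the other.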

Note that going forward, we will use the open source Air Quality data set from the UC Irvine Machine Learning Repository, available under a CC BY 4.0 license.

In order to quantitatively measure the performance of the forecasting models going forward, we will use root mean squared error (RMSE), which is calculated as the square root of the average of the squared differences between the actuals and the predictions, i.e. RMSE = sqrt(mean((actual - predicted)^2)). Since this is an error, we would like to minimize it: the lower the RMSE, the better the forecast fits the actuals and hence the better the forecasting model.
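A tiny worked example may help make the metric tangible. The `rmse` helper and the three made-up data points below are for illustration only; the code in this post computes the same quantity with scikit-learn’s `mean_squared_error` followed by `np.sqrt`.

```python
import numpy as np

def rmse(actual, predicted):
    """Square root of the mean of the squared differences."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((actual - predicted) ** 2)))

# errors are 1, 0 and -2, so the squared errors are 1, 0 and 4 (mean 5/3)
example = rmse([3, 5, 7], [2, 5, 9])
perfect = rmse([3, 5, 7], [3, 5, 7])
print(round(example, 4))  # 1.291
print(perfect)            # 0.0
```

Because the errors are squared before averaging, a single large miss raises RMSE more than several small ones, and the result is expressed in the same units as the variable being forecast.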

Let’s implement VAR and look at the forecasts. I have added comments in the code to make it easier to follow.

# import libraries

import pandas as pd

from statsmodels.tsa.api import VAR

import matplotlib.pyplot as plt

from sklearn.metrics import mean_squared_error

import numpy as np

# load the dataset

file_path = './AirQualityUCI.csv'

data = pd.read_csv(file_path, delimiter=';')

# clean numeric columns

columns_to_clean = ['C6H6(GT)', 'T', 'RH', 'AH']

# replace commas with dots and convert them to numeric

for col in columns_to_clean:

    data[col] = data[col].str.replace(',', '.')

    data[col] = pd.to_numeric(data[col], errors='coerce')

# drop the unnamed columns and any rows with missing values

data_cleaned = data.drop(columns=['Unnamed: 15', 'Unnamed: 16']).dropna()

# convert 'Date' and 'Time' to a single DateTime index

data_cleaned['DateTime'] = pd.to_datetime(data['Date'] + ' ' + data['Time'], format='%d/%m/%Y %H.%M.%S')

data_cleaned = data_cleaned.set_index('DateTime')

# drop the 'Date' and 'Time' columns

data_cleaned = data_cleaned.drop(columns=['Date', 'Time'])

# ensure all columns are numeric

data_cleaned = data_cleaned.apply(pd.to_numeric, errors='coerce')

# drop rows with NaN

data_cleaned = data_cleaned.dropna()

# split the data (80% train, 20% test)

train_size = int(len(data_cleaned) * 0.8)

train_data = data_cleaned[:train_size]

test_data = data_cleaned[train_size:]

# fit VAR

model = VAR(train_data)

fitted_model = model.fit()

# forecast

lag_order = fitted_model.k_ar

forecast_steps = len(test_data)

forecast = fitted_model.forecast(train_data.values[-lag_order:], steps=forecast_steps)

# convert forecast into a dataframe for comparison with actuals

forecast_df = pd.DataFrame(forecast, index=test_data.index, columns=train_data.columns)

# evaluate

rmse = np.sqrt(mean_squared_error(test_data['NOx(GT)'], forecast_df['NOx(GT)']))

print(f'Root Mean Squared Error (RMSE): {rmse}')

# visualize

plt.figure(figsize=(12, 6))

plt.plot(test_data['NOx(GT)'], label='Actual')

plt.plot(forecast_df['NOx(GT)'], label='VAR Forecasted', linestyle='--')

plt.title('Actual vs Forecasted - VAR')

plt.xlabel('Date')

plt.ylabel('NOx')

plt.legend()

plt.show()

Results:

On the positive side, the model generated some forecasts but on the negative side, the forecasts are not really good. It looks like the model was unable to recognize the underlying pattern. The point here was to demonstrate how to implement this approach, rather than getting a good performance. We will implement other models and will see whether we can get better performance.

In order to get better performance, we will move on to machine learning approaches.

3.3. Machine Learning Approaches

When it comes to machine learning, there are many approaches that can help us with forecasting in time series, including various regression and decision tree approaches, such as random forest and XGBoost.

3.3.1. Random Forest

Random forest is a powerful and very popular machine learning algorithm that is used both for regression and classification tasks. We do not need to get into the details of how these models work for this post but it is good to know that random forest is based on the idea of ensemble learning. In ensemble learning, multiple models, such as decision trees in a random forest, are combined to produce better performance compared to a single model. Now that we are familiar with what random forests are, let’s implement it for our current problem.

We will use the same train and test set from previous example so that we can compare the performance.
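A random forest has no built-in notion of time order, so the code that follows gives it the previous values of NOx(GT) as extra columns. Here is a toy sketch of that lagging step, using made-up numbers and two lags instead of three, to show what `shift` and `dropna` do to the table.

```python
import pandas as pd

# made-up readings, purely for illustration
toy = pd.DataFrame({'NOx(GT)': [10.0, 20.0, 30.0, 40.0, 50.0]})

# each lag column holds the value observed i rows earlier
for i in range(1, 3):
    toy[f'NOx_lag_{i}'] = toy['NOx(GT)'].shift(i)

# the first rows have no history to look back on, so they are dropped
toy = toy.dropna()
print(toy)
```

After this step every remaining row contains the current value alongside its two predecessors, which turns the forecasting problem into an ordinary tabular regression problem.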

# import libraries

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_squared_error

import matplotlib.pyplot as plt

import numpy as np

# create lagged features

def create_lagged_features(data, n_lags):

    lagged_data = data.copy()

    for i in range(1, n_lags + 1):

        lagged_data[f'NOx_lag_{i}'] = lagged_data['NOx(GT)'].shift(i)

    lagged_data = lagged_data.dropna()

    return lagged_data

n_lags = 3

# apply lagged feature generation to both train and test data

train_data_lagged = create_lagged_features(train_data, n_lags)

test_data_lagged = create_lagged_features(test_data, n_lags)

# separate X and y

X_train = train_data_lagged.drop(columns=['NOx(GT)'])

y_train = train_data_lagged['NOx(GT)']

X_test = test_data_lagged.drop(columns=['NOx(GT)'])

y_test = test_data_lagged['NOx(GT)']

# train

rf_model = RandomForestRegressor(n_estimators=100, random_state=42)

rf_model.fit(X_train, y_train)

# forecast

y_pred = rf_model.predict(X_test)

# evaluate

rmse = np.sqrt(mean_squared_error(y_test, y_pred))

print(f'Root Mean Squared Error (RMSE): {rmse}')

# visualize

plt.figure(figsize=(12, 6))

plt.plot(y_test.index, y_test, label='Actual')

plt.plot(y_test.index, y_pred, label='Random Forest Forecasted', linestyle='--')

plt.title('Actual vs Forecasted - Random Forest')

plt.xlabel('Date')

plt.ylabel('NOx(GT)')

plt.legend()

plt.show()

Results:

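That is quite interesting! As we can see, the forecasted values follow the actuals quite closely! This is much better compared to what we observed using VAR. We can also see this quantitatively by comparing the root mean squared error, which dropped from around 365 for VAR to as low as 132 for random forest. Keep in mind that the two setups are not identical: VAR forecasts the entire test window from the end of the training data, while the random forest predicts each point from the actual lagged values and the other sensor readings at that time step, which is an easier task. Let’s see how well XGBoost does next.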

3.3.2. XGBoost

XGBoost stands for extreme gradient boosting. It is a gradient-boosted decision tree model that can be used for both supervised regression and classification tasks. I have a separate post that goes deeper into what XGBoost is and how it is implemented step by step, so I will not repeat those parts here (but we will implement it briefly below). Feel free to refer to this post if you are interested in learning more about XGBoost.

XGBoost: Intro, Step-by-Step Implementation, and Performance Comparison

For this post, we will use the same train and test set from previous example so that we can compare the performance.

# import libraries

from xgboost import XGBRegressor

from sklearn.metrics import mean_squared_error

import matplotlib.pyplot as plt

import numpy as np

# create lagged features for both train_data and test_data

def create_lagged_features(data, n_lags):

    lagged_data = data.copy()

    for i in range(1, n_lags + 1):

        lagged_data[f'NOx_lag_{i}'] = lagged_data['NOx(GT)'].shift(i)

    lagged_data = lagged_data.dropna()

    return lagged_data

n_lags = 3

# apply lagged feature generation to train_data and test_data

train_data_lagged = create_lagged_features(train_data, n_lags)

test_data_lagged = create_lagged_features(test_data, n_lags)

# break down to X and y

X_train = train_data_lagged.drop(columns=['NOx(GT)'])

y_train = train_data_lagged['NOx(GT)']

X_test = test_data_lagged.drop(columns=['NOx(GT)'])

y_test = test_data_lagged['NOx(GT)']

# train

xgb_model = XGBRegressor(n_estimators=100, random_state=42)

xgb_model.fit(X_train, y_train)

# forecast

y_pred = xgb_model.predict(X_test)

# evaluate

rmse = np.sqrt(mean_squared_error(y_test, y_pred))

print(f'Root Mean Squared Error (RMSE): {rmse}')

# visualize

plt.figure(figsize=(12, 6))

plt.plot(y_test.index, y_test, label='Actual')

plt.plot(y_test.index, y_pred, label='XGBoost Forecasted', linestyle='--')

plt.title('Actual vs Forecasted - XGBoost')

plt.xlabel('Date')

plt.ylabel('NOx(GT)')

plt.legend()

plt.show()

Results:

Look at that! XGBoost seems to be performing even better than the previous random forest, since the RMSE is now down to 115 from 132 that we saw using random forest. We can also visually confirm that forecasted values closely follow the actuals.

The last group that we will cover in this post is the deep learning approaches!

3.4. Deep Learning Approaches

We are going to cover two of the most common deep learning approaches used for time series in this portion of the post: long short-term memory (LSTM) and gated recurrent unit (GRU). We will implement both approaches for our existing problem and then discuss the trade-off of performance vs. required resources for the two.

3.4.1. Long Short-Term Memory (LSTM)

LSTMs are a type of recurrent neural network (RNN), which can remember the information in the past sequences of the data quite well — this makes them powerful tools in time series forecasting. For the purposes of this post, we do not need to understand what is happening inside these algorithms but let’s implement them and then compare the results to the models we previously covered.
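Recurrent layers expect three-dimensional input of shape (samples, timesteps, features). One common way to build such a tensor, sketched below with NumPy, is to slide a window over the series so that each sample holds the previous `n_lags` rows of every feature. Note that this is an alternative framing shown for illustration, not what the code that follows does (it reshapes the flat lagged table instead); `make_windows` and the toy array are hypothetical.

```python
import numpy as np

def make_windows(values, n_lags, target_col):
    """Turn a (time, features) array into
    X with shape (samples, n_lags, features) and y with shape (samples,).
    Each sample in X holds n_lags consecutive rows; y is the target column
    at the time step right after that window."""
    values = np.asarray(values, dtype=float)
    X = np.stack([values[i : i + n_lags] for i in range(len(values) - n_lags)])
    y = values[n_lags:, target_col]
    return X, y

# toy data: 10 time steps, 2 features
toy = np.arange(20, dtype=float).reshape(10, 2)
X, y = make_windows(toy, n_lags=3, target_col=0)

print(X.shape, y.shape)  # (7, 3, 2) (7,)
print(X[0])              # rows 0, 1 and 2 of the toy data
print(y[0])              # 6.0, the first feature at row 3
```

With this layout the middle axis really is time: the network reads row t-3, then t-2, then t-1, and is asked to predict the target at t.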

# import libraries

import numpy as np

import pandas as pd

from sklearn.metrics import mean_squared_error

import matplotlib.pyplot as plt

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Dense

from tensorflow.keras.callbacks import EarlyStopping

# data preparation

def create_lagged_features_lstm(data, n_lags):

    lagged_data = data.copy()

    for i in range(1, n_lags + 1):

        lagged_data[f'NOx_lag_{i}'] = lagged_data['NOx(GT)'].shift(i)

    lagged_data = lagged_data.dropna()

    return lagged_data

n_lags = 3

# apply lagged feature generation to train_data and test_data

train_data_lagged = create_lagged_features_lstm(train_data, n_lags)

test_data_lagged = create_lagged_features_lstm(test_data, n_lags)

# separate X and y

X_train_all_features = train_data_lagged.drop(columns=['NOx(GT)']).values # Use all features

y_train = train_data_lagged['NOx(GT)'].values

X_test_all_features = test_data_lagged.drop(columns=['NOx(GT)']).values

y_test = test_data_lagged['NOx(GT)'].values

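# reshape data for LSTM (samples, timesteps, features)

# note: this splits the flat feature columns into n_lags equal groups, so the column count must be

# divisible by n_lags; the groups are blocks of columns rather than consecutive time steps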

X_train_lstm_all_features = X_train_all_features.reshape((X_train_all_features.shape[0], n_lags, X_train_all_features.shape[1] // n_lags))

X_test_lstm_all_features = X_test_all_features.reshape((X_test_all_features.shape[0], n_lags, X_test_all_features.shape[1] // n_lags))

# build the model

model_all_features = Sequential()

model_all_features.add(LSTM(50, activation='relu', input_shape=(n_lags, X_train_lstm_all_features.shape[2])))

model_all_features.add(Dense(1))

model_all_features.compile(optimizer='adam', loss='mse')

# add early stopping to prevent overfitting

early_stopping = EarlyStopping(monitor='val_loss', patience=10, restore_best_weights=True)

# train

history_all_features = model_all_features.fit(X_train_lstm_all_features, y_train, epochs=100, batch_size=32, validation_data=(X_test_lstm_all_features, y_test), callbacks=[early_stopping], verbose=1)

# forecast

y_pred_all_features = model_all_features.predict(X_test_lstm_all_features)

# evaluate

rmse_all_features = np.sqrt(mean_squared_error(y_test, y_pred_all_features))

print(f'Root Mean Squared Error (RMSE): {rmse_all_features}')

# visualize

plt.figure(figsize=(12, 6))

plt.plot(y_test, label='Actual')

plt.plot(y_pred_all_features, label='LSTM (All Features)', linestyle='--')

plt.title('Actual vs Forecasted - LSTM')

plt.xlabel('Time Step')

plt.ylabel('NOx(GT)')

plt.legend()

plt.show()

Results:

We can visually see that LSTM was able to follow the patterns of the data quite well but the RMSE is not as low as what we observed with XGBoost (149 vs. 115) — that said, it is one of the more powerful models we tried here. Let’s see how GRUs perform in comparison.

3.4.2. Gated Recurrent Unit (GRU)

GRUs are another type of recurrent neural network, similar to LSTMs but with a slightly simpler architecture under the hood. GRUs tend to perform well on sequential data such as time series and, since they require fewer resources than LSTMs, they can be quite useful for certain problems.

The point here is that, in comparison to LSTMs, we may sacrifice some performance with GRUs but we get to spend fewer computational resources on the problem. So the selection between the two can be a trade-off between performance and resources.
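One way to put a number on the “fewer resources” claim is to count trainable parameters. The sketch below uses the standard counting formulas for a single LSTM layer and a single GRU layer (the GRU variant with two bias vectors per gate corresponds to the Keras default `reset_after=True`). The layer width of 50 matches the layers in this post, while the input width of 5 features is a hypothetical value chosen for illustration.

```python
def lstm_param_count(units, n_features):
    # 4 weight sets (input, forget, cell, output), each with
    # input weights, recurrent weights and one bias vector
    return 4 * (units * (n_features + units) + units)

def gru_param_count(units, n_features, reset_after=True):
    # 3 weight sets (update, reset, candidate); with reset_after=True
    # each set keeps two bias vectors instead of one
    biases = 2 * units if reset_after else units
    return 3 * (units * (n_features + units) + biases)

units, n_features = 50, 5  # 5 input features is an assumed value
print(lstm_param_count(units, n_features))  # 11200
print(gru_param_count(units, n_features))   # 8550
```

With the same width, the GRU layer has roughly three quarters of the parameters of the LSTM layer, which is where the savings in memory and training time come from.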

# import libraries

import numpy as np

import pandas as pd

from sklearn.metrics import mean_squared_error

import matplotlib.pyplot as plt

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import GRU, Dense

from tensorflow.keras.callbacks import EarlyStopping

# data prep

def create_lagged_features_gru(data, n_lags):

    lagged_data = data.copy()

    for i in range(1, n_lags + 1):

        lagged_data[f'NOx_lag_{i}'] = lagged_data['NOx(GT)'].shift(i)

    lagged_data = lagged_data.dropna()

    return lagged_data

n_lags = 3

# apply lagged feature generation to train and test

train_data_lagged = create_lagged_features_gru(train_data, n_lags)

test_data_lagged = create_lagged_features_gru(test_data, n_lags)

# separate out X and y

X_train_all_features = train_data_lagged.drop(columns=['NOx(GT)']).values

y_train = train_data_lagged['NOx(GT)'].values

X_test_all_features = test_data_lagged.drop(columns=['NOx(GT)']).values

y_test = test_data_lagged['NOx(GT)'].values

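# reshape data for GRU (samples, timesteps, features)

# note: this splits the flat feature columns into n_lags equal groups, so the column count must be

# divisible by n_lags; the groups are blocks of columns rather than consecutive time steps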

X_train_gru_all_features = X_train_all_features.reshape((X_train_all_features.shape[0], n_lags, X_train_all_features.shape[1] // n_lags))

X_test_gru_all_features = X_test_all_features.reshape((X_test_all_features.shape[0], n_lags, X_test_all_features.shape[1] // n_lags))

# build GRU

model_gru_all_features = Sequential()

model_gru_all_features.add(GRU(50, activation='relu', input_shape=(n_lags, X_train_gru_all_features.shape[2]))) # Input shape is (timesteps, features)

model_gru_all_features.add(Dense(1)) # Output layer predicting one value (NOx(GT))

model_gru_all_features.compile(optimizer='adam', loss='mse')

# add early stopping to prevent overfitting

early_stopping = EarlyStopping(monitor='val_loss', patience=10, restore_best_weights=True)

# train

history_gru_all_features = model_gru_all_features.fit(X_train_gru_all_features, y_train, epochs=100, batch_size=32, validation_data=(X_test_gru_all_features, y_test), callbacks=[early_stopping], verbose=1)

# forecast

y_pred_gru_all_features = model_gru_all_features.predict(X_test_gru_all_features)

# evaluate

rmse_gru_all_features = np.sqrt(mean_squared_error(y_test, y_pred_gru_all_features))

print(f'Root Mean Squared Error (RMSE) for GRU (All Features): {rmse_gru_all_features}')

# visualize

plt.figure(figsize=(12, 6))

plt.plot(y_test, label='Actual')

plt.plot(y_pred_gru_all_features, label='GRU', linestyle='--')

plt.title('Actual vs Forecasted - GRU')

plt.xlabel('Time Step')

plt.ylabel('NOx(GT)')

plt.legend()

plt.show()

Results:

GRU did not disappoint either! Performance is quite good visually, although XGBoost remains undefeated for this exercise: GRU reaches an RMSE of 172 vs. 115 for XGBoost!

4. Conclusion

In this post, we took a deeper and wider dive into the realm of time series and used them for forecasting. We went deeper by reviewing the foundational topics related to these approaches, and then we went wider by reviewing 10 different approaches, grouped by their underlying architecture, implementing examples for some of them and discussing certain trade-offs involved.

Thanks For Reading!

If you found this post helpful, please follow me on Medium and subscribe to receive my latest posts!

(All images, unless otherwise noted, are by the author.)

Time Series — From Analyzing the Past to Predicting the Future was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
