Your Data Quality Checks Are Worth Less (Than You Think)

How to deliver outsized value on your data quality program

Over the last several years, data quality and observability have become hot topics. There is a huge array of solutions in the space (in no particular order, and certainly not exhaustive):

Regardless of their specific features, all of these tools have a similar goal: improve visibility of data quality issues, reduce the number of data incidents, and increase trust. Despite a lower barrier to entry, however, data quality programs remain difficult to implement successfully. I believe that there are three low-hanging fruit that can improve your outcomes. Let’s dive in!

Hint 1: Focus on process failures, not bad records (when you can)

For engineering-minded folks, it can be hard pill to swallow that some number of “bad” records will not only flow into your system but through your system, and that may be OK! Consider the following:

- Will the bad records flush out when corrected in the source system? If so, you may go to extraordinary lengths in your warehouse or lakehouse to correct data that is trivial for a source system operator to fix, with the result that your reporting is correct on the next refresh

- Is the dataset useful if it’s “directionally correct” in aggregate? CRM data is a classic example, since many fields need to be populated manually, and there’s a relatively high error rate compared to automated processes. Even if these errors aren’t corrected, as long as they’re not systemic, the dataset may still be useful

- Is accuracy of individual records extremely important? Financial reporting, operational reporting on sensor data from expensive machinery, and other “spot-critical” use cases deserve the time and effort needed to identify (and possibly isolate, remove, or remediate) bad records

If your data product can tolerate Type 1 or Type 2 issues, fantastic! You can save a lot of effort by focusing on detection and alerting of process failures rather than one-off or limited anomalies. You can measure high-level metrics skimmed from metadata, such as record counts, unique counts of key columns, and min / max values. A rogue process in your application or SaaS systems can generate too many or too few records, or perhaps a new enumerated value has been added to a column unexpectedly. Depending on your specific use cases, you may need to write custom tests (e. g., total revenue by date and market segment or region), so make sure to profile your data and common failure scenarios.

On the other hand, Type 3 issues require more complex systems and decisions. Do you move bad records to a dead-letter queue and send an alert for manual remediation? Do you build a self-healing process for well-understood data quality issues? Do you simply modify the record in some way to indicate the data quality issue so that downstream processes can decide how to handle the problem? These are all valid approaches, but they do require compute ($) and developer time ($$$$) to maintain.

Hint 2: Don’t duplicate your efforts

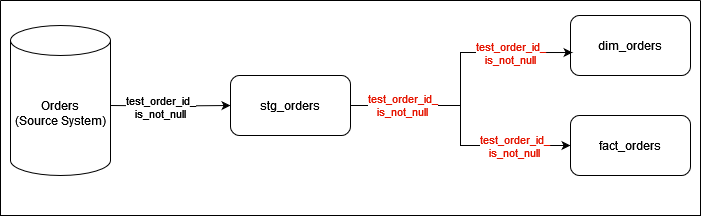

Long data pipelines with lots of transformation steps require a lot of testing to ensure data quality throughout, but don’t make the mistake of repeatedly testing the same data with the same tests. For example, you may be testing that an identifier is not null from a SaaS object or product event stream upon ingestion, and then your transform steps implement the same tests:

These kinds of duplicate tests can add to cloud spend and development costs, even though they provide no value. The tricky part is that even if you’re aware that duplication is a bad pattern, long and complex pipelines can make reasoning about all of their data quality tests difficult.

To my knowledge, there isn’t mature tooling available to visualize data quality lineage; just because an upstream source has a data quality test doesn’t necessarily mean that it will capture the same kinds of issues as a test in a downstream model. To that end, engineers need to be intentional about data quality monitors. You can’t just add a test to a dataset and call it a day; you need to think about the broader data quality ecosystem and what a test adds to it.

Hint 3: Avoid alert fatigue and focus on what matters

Perhaps one of the biggest risks to your data quality program isn’t too few data quality tests; it’s too many! Frequently, we build out massive suites of data quality monitors and alerts, only to find our teams overwhelmed. When everything’s important, nothing is.

If your team can’t act on an alert, whether because of an internal force like capacity constraints or an external force like poor data source quality, you probably shouldn’t have it in place. That’s not to say that you shouldn’t have visibility into these issues, but they can be reserved for reports on a less frequent basis, where they can be evaluated alongside more actionable alerts.

Likewise, on a regular basis, review alerts and pages, and ruthlessly cut the ones that weren’t actionable. Nobody’s winning awards for generating pages and tickets for issues that couldn’t be resolved, or whose resolution wasn’t worth an engineer’s time to address.

Conclusion

Data quality monitoring is an essential component of any modern data operation, and despite the plethora of tools, both open source and proprietary, it can be difficult to implement a successful program. You can spend a lot of time and energy on data quality without seeing results.

To summarize:

- When possible, focus on aggregated data rather than individual data points

- Only test the data once for the same data quality issue. Duplicate tests waste compute and developer time

- Ensure your alert volume doesn’t overwhelm your team. If they can’t act on all of the alerts in a reasonable amount of time, you either have to staff up, or you need to cut down on the alerts

All of that being said, the most important thing to remember is to focus on value. It can be difficult to quantify the value of your data quality program, but at the very least, you should have some reasonable thesis about your interventions. We know that frozen, broken, or inaccurate pipelines can cost significant amounts of developer, analyst, and business stakeholder time. For every check and monitor, think about how you are or aren’t moving the needle. A big impact doesn’t require a massive program, as long as you target the right problems.

Your Data Quality Checks Are Worth Less (Than You Think) was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

from Datascience in Towards Data Science on Medium https://ift.tt/nLaZyrK

via IFTTT